ISO/OSI in depth: Network vs. Transport

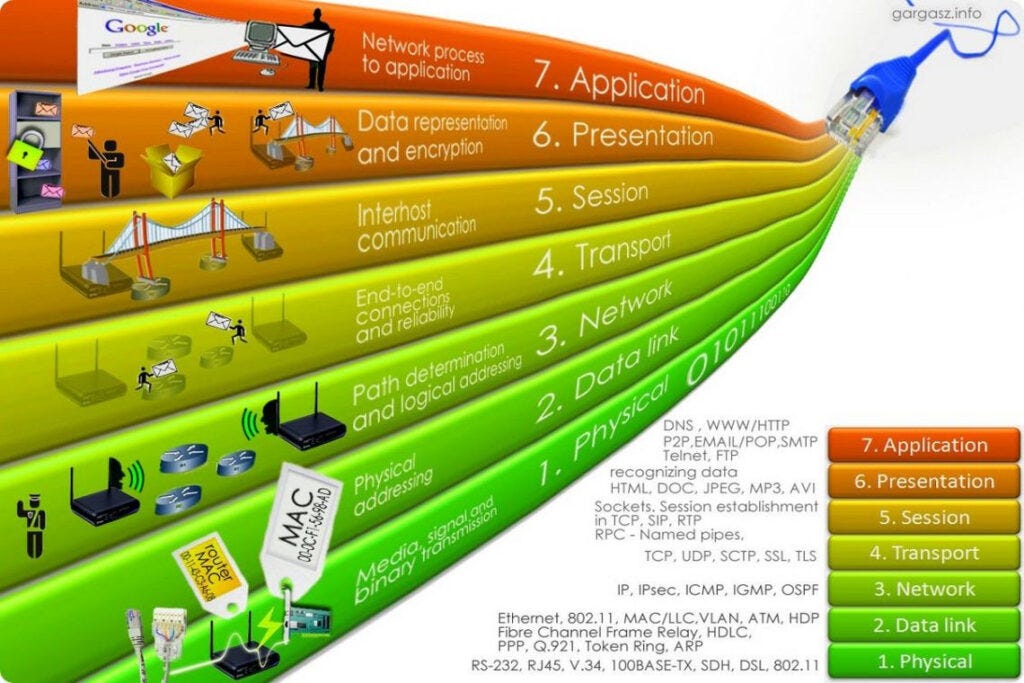

The so-called "stacked" protocol model is one of the most fundamental concepts in network architecture. This model was created in the late 1970s as part of a larger effort to develop general networking principles and methods.

The Basic Reference Model for Open Systems Interconnection, also known as the Open Systems Interconnection Reference Model or the "OSI model," was established in 1983 when the CCITT and ISO combined their efforts. It defined a seven-layer abstract model of networking that described standard behaviours for the network as a whole as well as for its various components.

ISO/OSI Reference Model

The model divided the functionality into two parts:

The media layers cover the encoding of binary data over physical communication media (the physical layer), the data frames exchanged by two directly connected "nodes" (the data link layer), and the operation of a multi-node network, including the addressing and routing behaviours that manage data transmission between attached hosts (the network layer).

The host layers are concerned with the functions of the end hosts.

Data segmentation into packets, end-to-end flow control, packet error recovery, and multiplexing are all performed by the transport layer. Above the transport layer in the OSI model sit the session layer, the presentation layer, and the application layer.

If we model a network as a series of interior nodes and a set of attached hosts, then the interior nodes use only the network layer to make a forwarding decision for each packet they handle, whilst the hosts use the transport layer to control the data flow between communicating hosts.

This model implies that there is a clear distinction between node and host roles, as well as a clear distinction between the information that each needs to perform its function. Nodes do not need to know about the transport layer settings, and hosts do not need to know about the network layer settings (Figure 2).

Figure 2: Functions of the Host and Node

In the Internet Protocol Suite, the network layer function is encoded as the IP header of a data packet, and the transport layer function is encoded as the transport header, which is usually either a TCP or a UDP header (although IP defines other transport headers as well). According to the Internet architecture model, a packet should be deliverable over the Internet regardless of the transport header attached to it.

As a result, the value you place in the Protocol field of the IP packet header shouldn't matter in the least. It has nothing to do with the network!
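As a rough illustration of that claim, the sketch below decodes the fixed 20-byte IPv4 header and shows that an idealised forwarding decision depends only on the destination address, whatever the Protocol field says. The helper names (`parse_ipv4_header`, `forwarding_key`, `build_header`) and the documentation-range addresses are assumptions made for this example, not part of any real router implementation.

```python
import socket
import struct

# Layout of the fixed 20-byte IPv4 header (no options), network byte order.
IPV4_FIXED = "!BBHHHBBH4s4s"

def parse_ipv4_header(packet: bytes) -> dict:
    """Decode the fixed part of an IPv4 header into a dictionary."""
    if len(packet) < 20:
        raise ValueError("need at least 20 bytes for an IPv4 header")
    (ver_ihl, _tos, total_len, _ident, _flags_frag,
     ttl, protocol, _checksum, src, dst) = struct.unpack(IPV4_FIXED, packet[:20])
    return {
        "version": ver_ihl >> 4,
        "header_len": (ver_ihl & 0x0F) * 4,
        "total_len": total_len,
        "ttl": ttl,
        "protocol": protocol,  # 6 = TCP, 17 = UDP, others are also defined
        "src": socket.inet_ntoa(src),
        "dst": socket.inet_ntoa(dst),
    }

def forwarding_key(header: dict) -> str:
    """What an idealised interior node needs: the destination address only."""
    return header["dst"]

def build_header(protocol: int) -> bytes:
    """Hypothetical test helper: a bare header with the given Protocol value."""
    return struct.pack(IPV4_FIXED, 0x45, 0, 20, 0, 0, 64, protocol, 0,
                       socket.inet_aton("192.0.2.1"),
                       socket.inet_aton("198.51.100.7"))

tcp_packet = parse_ipv4_header(build_header(6))      # TCP
other_packet = parse_ipv4_header(build_header(253))  # experimental protocol number

# Same destination, so the same forwarding decision, regardless of Protocol.
print(forwarding_key(tcp_packet) == forwarding_key(other_packet))
```

In this idealised model the two packets are indistinguishable to an interior node, which is exactly the layering assumption the rest of the article goes on to question.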

Note that the same reasoning applies to the extension headers in IPv6. However, for whatever reason, IPv6 has scrambled the egg: some extension headers, such as the Hop-by-Hop and Routing extension headers, are addressed to network elements, while the majority seem to be addressed to the destination host. If extension headers had been specified solely as host (or destination) options, IPv6 networks could simply ignore them, just as hosts could ignore them if they were meant solely as network options. Maybe this is one of those places where theory and practice don't quite line up.

In a strict sense, the Protocol field should never have been included in the IPv4 packet header in the first place. In principle, the network is unconcerned with the transport protocol that the communicating hosts use. This also implies that if the two communicating hosts want to hide the transport protocol control settings from the network, the network should not be affected in the least.

In today's public Internet, however, it matters a great deal that the transport protocol header is visible to the network. In practice, not only must the transport header be visible to the network, but the choice of transport protocol that the hosts make also matters to the network. This is because many components of today's network not only look at the transport headers of the packets they carry, but depend on the details contained in them. Network Address Translators, Equal Cost Multi-Path load balancers, and Quality of Service policy engines, to name a few, are examples of this dependency.

In order to make consistent packet-handling decisions for all packets within a single transport flow, these network functions make assumptions about the visibility of transport headers in IP packets. Often, these network functions go a step further and process only packets with well-known transport headers (typically TCP and UDP), ignoring everything else.
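To make that dependency concrete, here is a minimal sketch of how an Equal Cost Multi-Path balancer might pick a path by hashing the flow identifier. It is an illustration under stated assumptions, not a description of any vendor's algorithm: the functions `flow_key` and `pick_path`, the use of SHA-256, and the fallback to a 3-tuple when ports are not visible are all choices made for this example.

```python
import hashlib

def flow_key(src_ip, dst_ip, protocol, src_port=None, dst_port=None):
    """Build the tuple a balancer hashes on.

    With visible ports this is the familiar 5-tuple; if the transport
    header is not readable, only the 3-tuple from the IP header remains.
    """
    if src_port is None or dst_port is None:
        return (src_ip, dst_ip, protocol)
    return (src_ip, dst_ip, protocol, src_port, dst_port)

def pick_path(key, n_paths):
    """Map a flow key onto one of n_paths equal-cost paths."""
    digest = hashlib.sha256(repr(key).encode()).digest()
    return int.from_bytes(digest[:4], "big") % n_paths

N_PATHS = 4
SRC, DST, TCP = "192.0.2.1", "198.51.100.7", 6

# Many flows between the same two hosts, distinguished only by source port.
with_ports = {pick_path(flow_key(SRC, DST, TCP, port, 443), N_PATHS)
              for port in range(1024, 2048)}

# The same flows when the balancer cannot see the ports.
without_ports = {pick_path(flow_key(SRC, DST, TCP), N_PATHS)
                 for _ in range(1024, 2048)}

print("paths used with visible ports:", len(with_ports))
print("paths used without ports:", len(without_ports))
```

Every packet of one flow hashes to the same path, which avoids re-ordering, but once the ports are hidden all traffic between a pair of hosts collapses onto a single path.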

Things have gone so far that today's rule of thumb is that unfragmented IP packets with a TCP transport header where one end uses port 443, and unfragmented IP packets with a UDP transport header where one end uses port 53, have the best probability of getting their data payload through to the intended destination. Any attempt to step outside this extraordinarily limited range of packet profiles raises the risk of network-based connectivity disruption.

Transport Headers that are Encrypted

If using a novel transport protocol in the public Internet is a self-limiting action, why are we still exploring the possibility of encrypting transport protocols to render all transport headers invisible to the network?

"Edward Snowden" is one possible answer. In response to the disclosures about widespread surveillance [RFC 7624], the Internet Engineering Task Force (IETF) took a "like for like" approach and came to the conclusion that "Pervasive Monitoring Is an Attack" [RFC 7258].

The general response to this kind of insidious attack has been to increase the level of encryption of Internet traffic in order to make network-based monitoring more difficult. This IETF response includes the use of TLS to encrypt session payloads wherever possible and a shift in application behaviour to make this the default, and it also covers other areas of Internet communication where a breach of the trust model was thought to be a problem.

The behaviour of the DNS protocol, as well as the exposure of transport protocol headers, has been included in this IETF-wide obfuscation effort. We've come a long way from the days when hosts lacked the capacity to perform encryption. Robust encryption is no longer a luxury of limited use, but something that any user can reasonably expect as a minimum requirement. If the goal is to limit information leakage across all facets of the Internet's communications system, the control metadata is just as critical as the data itself. Encrypting transport header fields helps to protect users' privacy and to prevent some attacks on, or manipulation of, packets by network devices [RFC 8404].

However, I believe that the issue of privacy is just one part of the story. Obscuring host functions from the network through transport header encryption is a direction that certain segments of the Internet ecosystem are aggressively pursuing today, and these efforts play to a common fear of a surveillance state operating in a relatively unregulated manner. Yet that fear may not be at the heart of why they are doing it.

It's unclear what the goal is here. As in many complex interdependent systems, intentionally obscuring one part of the system from another has both advantages and drawbacks. RFC 8546, "The Wire Image of a Network Protocol," was published by the IAB in April 2019. By today's RFC standards it is a brief document (9 pages), but brevity does not always mean clarity.

This document seems to have cloaked whatever message it was trying to convey in such a thick layer of vague terms that it has managed to say nothing useful! It seems to have been prompted by a lengthy debate in the QUIC Working Group about the use of the visible spin bit in the QUIC transport protocol (see a previous article on this subject). I suspect it began as an attempt to argue for some kind of visibility of transport behaviour to the network, but the IAB's musings on the topic end up offering little in the way of answers.

The IAB isn't known for being a prolific commentator, so any matter that prompts an IAB response, no matter how obscure that response might be, is evidently a subject of general interest rather than an esoteric quarrel hidden deep within the design of a specific protocol.

Since the encryption of transport headers is a transport matter, it's natural to wonder whether the IETF's Transport Area can do a better job than the IAB of offering a clear and detailed explanation of the issues. The IETF's Transport Area Working Group has completed its review of a draft on this subject, and the Internet-Draft "Considerations around Transport Header Confidentiality, Network Operations, and the Evolution of Internet Transport Protocols" is now in the RFC Editor Queue.

According to the document's introduction: "This document discusses the possible impact when network traffic uses a protocol with an encrypted transport header. It suggests issues to consider when designing new transport protocols or features."

This document seems to be an attempt to provide a more practical commentary on header encryption than the previous IAB effort.

At 49 pages, this extended commentary clearly isn't going to be a quick read, but can it improve the clarity of the points being discussed?

The document begins with several justifications for the network's use of transport header content. It cites link aggregation and the problem of packet re-ordering: to obtain a more granular picture of a traffic flow than can be had from source and destination IP address pairs alone, the network commonly peers into the transport header.

In this role the transport header is the IPv4 substitute for the IPv6 Flow Label. (Although the intended function of the IPv6 Flow Label field is so muddled that it's difficult to see how the field is useful in any case!)

Under the heading of "Quality of Service," the document mentions differentiated service efforts that aim to selectively disrupt traffic flows. (That "Quality" label always seems to me to have an Orwellian connotation; a more honest label would be "Selective Service Degradation," or even just "Carriage Standover Services.")

The document also lists the different types of analysis that network operators may perform with transport-level data, such as traffic profile analysis and measurements of latency, jitter, and packet loss. To me, however, it offers a dubious collection of rationales.

It reminds me of a voice telephony operator defending its eavesdropping on phone calls by claiming that the intelligence collected, in other words the details of what people are saying to each other in a telephone call, may be used to improve the telephone network! The document also invokes a nebulous notion of "availability," arguing that if network operators were no longer able to eavesdrop on the transport parameters of active sessions, their capacity to operate a stable network would be undermined in some unspecified way.

As I see it, none of the rationales raised in this document would survive close examination.

In its analysis, the document tends to adopt a privacy-oriented framing. The privacy argument, however, seems to be a proxy for a more significant conflict between content and carriage. To a large degree, the problem from the application's viewpoint is that network operators' attempts to conduct "traffic grooming" by modifying transport headers achieve nothing more than harm to application data flows, lowering the efficiency of the network's carriage.

And it is here that we can look for the real tensions between networks and hosts in today's Internet: the use of networks to selectively degrade transport efficiency in the name of network service quality.

Interfering with the Transport Protocol

To begin, we should consider the tensions that exist between hosts and networks on the Internet.

In the world of telephony, the network provider was in charge of all traffic. From the network you could reserve either a virtual circuit capable of carrying a real-time voice conversation or a fixed-capacity channel between two endpoints. If you chose one of these services you couldn't send faster than the contracted rate, and if you sent slower, you didn't free up any common capacity for anyone else to use.

Naturally, the network charged more for higher-capacity leases. Much of that changed with the introduction of packet networks. Since the network imposed little policing, different applications (or traffic flows) competed for the same transmission capacity. This posed a challenge for networks that wished to manage the allocation of shared common communications resources among clients.

This was the impetus for a significant body of Internet work in the 1990s and 2000s on "Quality of Service" (or QoS). Network providers wanted to provide a "better" service to certain customers and traffic profiles (undoubtedly for a fee). However, if a network has a fixed capacity, giving certain customers a greater share of the network's resources necessarily means giving others less. And although the network could interfere with a communication session in a variety of ways to make it go slower, making a session go faster was far more difficult (and perhaps impossible in some cases).

This meant that if you wanted to give one kind of traffic flow preferential treatment, you had to make all the others go slower! So-called "Performance Enhancing Proxies" couldn't really make the chosen TCP sessions go faster. The aim was instead to free up some network capacity so that the sessions that were supposed to be prioritised could open their sending windows to fill the newly freed space.

What they could do was slow down other TCP sessions, which gave the chosen sessions a lower packet loss probability and thereby a higher achievable data throughput. Dropping packets is one method of throttling sessions. Altering the TCP control parameters is a more subtle yet equally effective one: the sender will throttle its sending rate if the advertised TCP window size is reduced.
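The effect of a reduced window can be put into numbers. A window-limited sender can have at most one window of data in flight per round trip, so its throughput is bounded by roughly window / RTT. The sketch below applies that standard relationship; the function names and the example figures (a 100 ms round trip, a 64 KB window rewritten to 16 KB, a 10 Mbps path) are illustrative assumptions, not measurements.

```python
def max_throughput_bps(window_bytes: int, rtt_ms: int) -> float:
    """Upper bound on throughput for a window-limited sender, in bits/second.

    At most one window of data can be unacknowledged per round trip.
    """
    return window_bytes * 8 * 1000 / rtt_ms

def bandwidth_delay_product_bytes(bandwidth_bps: int, rtt_ms: int) -> float:
    """Bytes that must be in flight to keep a path of this capacity full."""
    return bandwidth_bps * rtt_ms / 8000

RTT_MS = 100

# Window as advertised by the receiving host.
original = max_throughput_bps(65535, RTT_MS)

# The same session after an on-path device rewrites the window to 16 KB.
clamped = max_throughput_bps(16384, RTT_MS)

print(f"original window: {original / 1e6:.2f} Mbps")
print(f"clamped window:  {clamped / 1e6:.2f} Mbps")
print(f"window needed to fill 10 Mbps: "
      f"{bandwidth_delay_product_bytes(10_000_000, RTT_MS):.0f} bytes")
```

Under these assumed figures, rewriting a single 16-bit header field cuts the session's ceiling to about a quarter, without dropping a single packet.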

Obviously, applications did not see this selective throttling of active TCP sessions by networks as a friendly gesture, and there have been two major responses from the application side. First, a new congestion control algorithm has been deployed that is less sensitive to packet loss and more sensitive to changes in the end-to-end bandwidth-delay product across network paths.

This is BBR, a recent sender-side congestion control algorithm for TCP. However, BBR is still vulnerable to on-path manipulation of the TCP window size. The second response is therefore to encrypt the transport header, hiding the TCP control information in the packet and protecting the session from this kind of network interference.

As noted earlier, you can't remove the visible transport header from IP packets on the public Internet, and simply encrypting the TCP header will almost certainly provoke the same drop response from the network. However, hosts may choose to disregard the values in the visible transport header. Although a visible transport header cannot be removed, it may be rendered meaningless by the host.

A "dummy" outer TCP wrapper may be used as fodder for networks that want to peer at the transport header and manipulate session settings, while the actual TCP control header is hidden within an encrypted payload. Apart from the observation that the TCP end hosts do not respond to manipulation of their window parameters, there will be little in the way of a visible network signature that this is occurring.

However, the trouble with this approach is that the application is now trying to wrest control of the transport session parameters not only from a meddling network, but also from the platform on which the application is hosted. The application could in theory use "raw IP" interfaces into the platform's I/O routines, but in practice this is almost impossible in deployed systems.

Production platforms tend to be suspicious of applications. (Given the prevalence of ransomware, this degree of paranoia on the platform's part is most likely justified.) Disabling all of the platform's handling of transport protocols and passing control of the transport protocol from the kernel to user space is a difficult task.

As a result, it makes sense to adopt QUIC's approach, in which the shim wrapper uses UDP as the visible transport header and pushes the transport control header into the IP packet's encrypted payload. UDP is close to ideal for this role since it has no transport controls and carries little more than port numbers.

In many respects, QUIC appears to the network as a UDP session that uses TLS-like session encryption. Since only the two applications at the "ends" of the QUIC transport can see the end-to-end transport control parameters carried in the encrypted UDP payload, end-to-end flow control is now truly end-to-end. The host platform has minimal control over UDP packets, so the application is given full control over the session's transport behaviour.
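The following sketch shows what an on-path observer can still read from such a packet if it parses only the UDP header. It is a deliberate simplification: real QUIC packets also expose a small number of unencrypted header fields, and here random bytes from `os.urandom` merely stand in for ciphertext. The names `build_datagram` and `on_path_view` are hypothetical helpers for this example.

```python
import os
import struct

UDP_HEADER = "!HHHH"  # source port, destination port, length, checksum

def build_datagram(src_port: int, dst_port: int, protected: bytes) -> bytes:
    """Prefix an opaque payload with an 8-byte UDP header.

    The checksum is left at zero, which UDP over IPv4 permits to mean
    "no checksum computed"; a real stack would normally fill it in.
    """
    length = 8 + len(protected)
    return struct.pack(UDP_HEADER, src_port, dst_port, length, 0) + protected

def on_path_view(datagram: bytes) -> dict:
    """Everything a middlebox learns from the UDP header alone."""
    src_port, dst_port, length, _checksum = struct.unpack(UDP_HEADER, datagram[:8])
    return {
        "src_port": src_port,
        "dst_port": dst_port,
        "length": length,
        "opaque_bytes": len(datagram) - 8,
    }

# Stand-in for an encrypted payload carrying sequence numbers, acks, and
# flow-control state that only the two endpoints can decode.
protected_payload = os.urandom(1200)

view = on_path_view(build_datagram(50000, 443, protected_payload))
print(view)
```

There is no window field, sequence number, or acknowledgement in the visible part for a middlebox to rewrite; the only levers left to the network are to forward, delay, or drop the packet.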

Carriage vs. Content

Perhaps the move to opaque transport headers reflects more than just a desire by applications to have more independent control. The shift to QUIC may be seen as the content providers' response to another round of a very familiar old game played by network operators: extracting a levy from content providers by holding their traffic hostage, or, as it became known, the tussle over "network neutrality."

There have been occasions where network providers have introduced policies to throttle those types of traffic that they claimed were using their network in some "unfair" way. The subtext in all of this is more likely the carriage operator's baser desire to extort a carriage toll from content distributors through a blunt form of blackmail: "My network, my rules. You are the one who pays!"

Many carriage providers in this market, I think, believe they are the victims here as they let content providers walk away with all the profits.

In order to recover some of their lost revenue streams, they are seeking a "fair share" of revenue by pressuring the content industry's behemoths to contribute to carriage costs. If, on the other hand, this pressure is applied by manipulating the transport control parameters of traffic passing through the carriage network (in other words, by holding traffic hostage), then the obvious response is to encrypt the transport controls alongside the content to prevent such on-the-fly manipulation of traffic profiles. And maybe this is a more convincing explanation of why QUIC is so relevant.

If the tension between carriage and content is a tussle for primacy, it seems that the content folks are gaining the upper hand. By encrypting at every level in the host part of the protocol stack, even at the transport layer, the content folks are withholding from the carriage providers the information that would enable them to selectively discriminate and pit content providers against each other.

If the network's view is restricted to completely encrypted UDP packet streams, one stream looks much like any other, and selective discrimination becomes impractical. If that isn't enough, padding and intentional variation in packet sizes will muddle any traffic profiling efforts.
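As a small illustration of the padding idea, the sketch below rounds every payload up to a multiple of a fixed bucket size before it would be encrypted, so that packet length reveals less about the content. The scheme shown (a 2-byte length prefix followed by zero padding), the 256-byte bucket, and the names `pad_to_bucket` and `unpad` are assumptions for this example rather than the padding rules of any particular protocol.

```python
def pad_to_bucket(payload: bytes, bucket: int = 256) -> bytes:
    """Length-prefix the payload, then zero-pad to a multiple of `bucket`.

    Intended to run before encryption, so that an observer sees only the
    padded length and cannot tell padding from data.
    """
    if len(payload) > 0xFFFF:
        raise ValueError("payload too large for a 2-byte length prefix")
    framed = len(payload).to_bytes(2, "big") + payload
    padded_len = -(-len(framed) // bucket) * bucket  # round up
    return framed + b"\x00" * (padded_len - len(framed))

def unpad(padded: bytes) -> bytes:
    """Recover the original payload after decryption."""
    n = int.from_bytes(padded[:2], "big")
    return padded[2:2 + n]

# Two quite different messages become indistinguishable by size alone.
short_msg = pad_to_bucket(b"GET /")
long_msg = pad_to_bucket(b"x" * 200)
print(len(short_msg), len(long_msg))
```

The cost is wasted capacity: the smaller the true payload relative to the bucket, the more of each packet is padding, which is the usual trade-off between efficiency and resistance to traffic profiling.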

However, when I say "content," I really mean "applications," and when I say "applications," I really mean "browsers," because I'm really talking about Chrome, and by Chrome, I mean Google.

The massive dominance of mobile traffic in the market, as well as the massive dominance of Android in the mobile device world, has a significant impact on this space. Given Google's control of the majority of mobile devices, as well as of the majority browser platform in this space, it's difficult to see how the company might fail in this battle.

However, even if Google defeats the carriage companies in this fight, it's doubtful that this will be the end of the story. It's highly likely that the carriage industry would follow the lead of traditional print media and go to politicians, arguing that Google's destruction of the business model for providing national communications infrastructure is detrimental to national interests, and that political intervention is needed to restore some balance to the market and allow carriage to remain a viable business. To put it another way, since Google has effectively destroyed the residual value in the carriage business, it should now compensate carriage operators to restore their viability.

At this point, the technical aspects of encryption and information leakage, as well as all industry considerations of the viability of different business models, walk out the door and are replaced by a slew of lawyers and politicians. Once we get past the myriad nebulous threats by players to leave national markets, we can focus on the real question: "What is a tenable commercial relationship between carriage and content?"

In such a politically charged setting, the options are either for the different business actors to negotiate and find an agreement that they can all live with, or for policymakers to impose an outcome that would almost certainly be much more disagreeable for all!

It should be entertaining to see this unfold over the next few years, regardless of the result. Remember to bring popcorn!
